The short answer first: at present, artificial intelligence cannot replace human beings, nor does it have real wisdom.

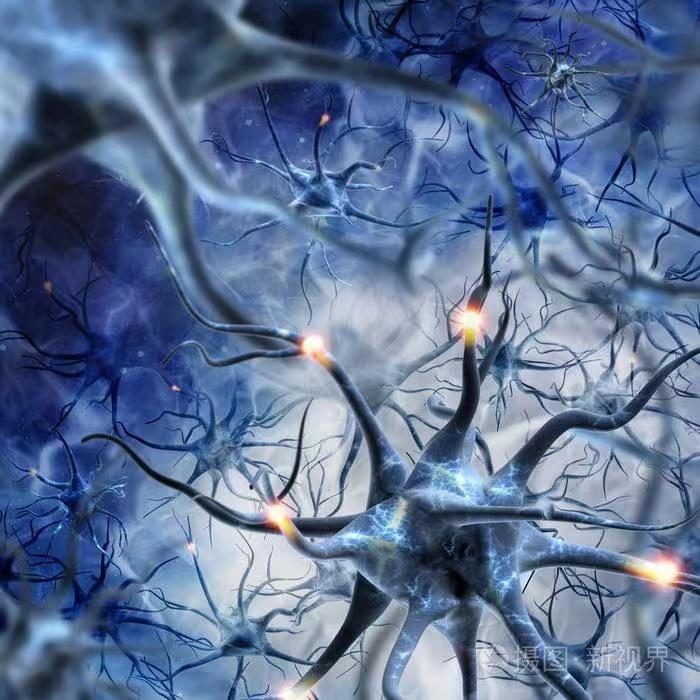

The human brain contains about 100 billion neurons. Why do humans have wisdom? According to one scientific explanation, wisdom emerged once the number of neurons in the human brain passed a certain threshold.

ChatGPT has 175 billion parameters. If we keep training it, will ChatGPT gain real wisdom? Or if we keep increasing its parameters, say to 1 trillion, will it gain real wisdom then?

The answer is no.

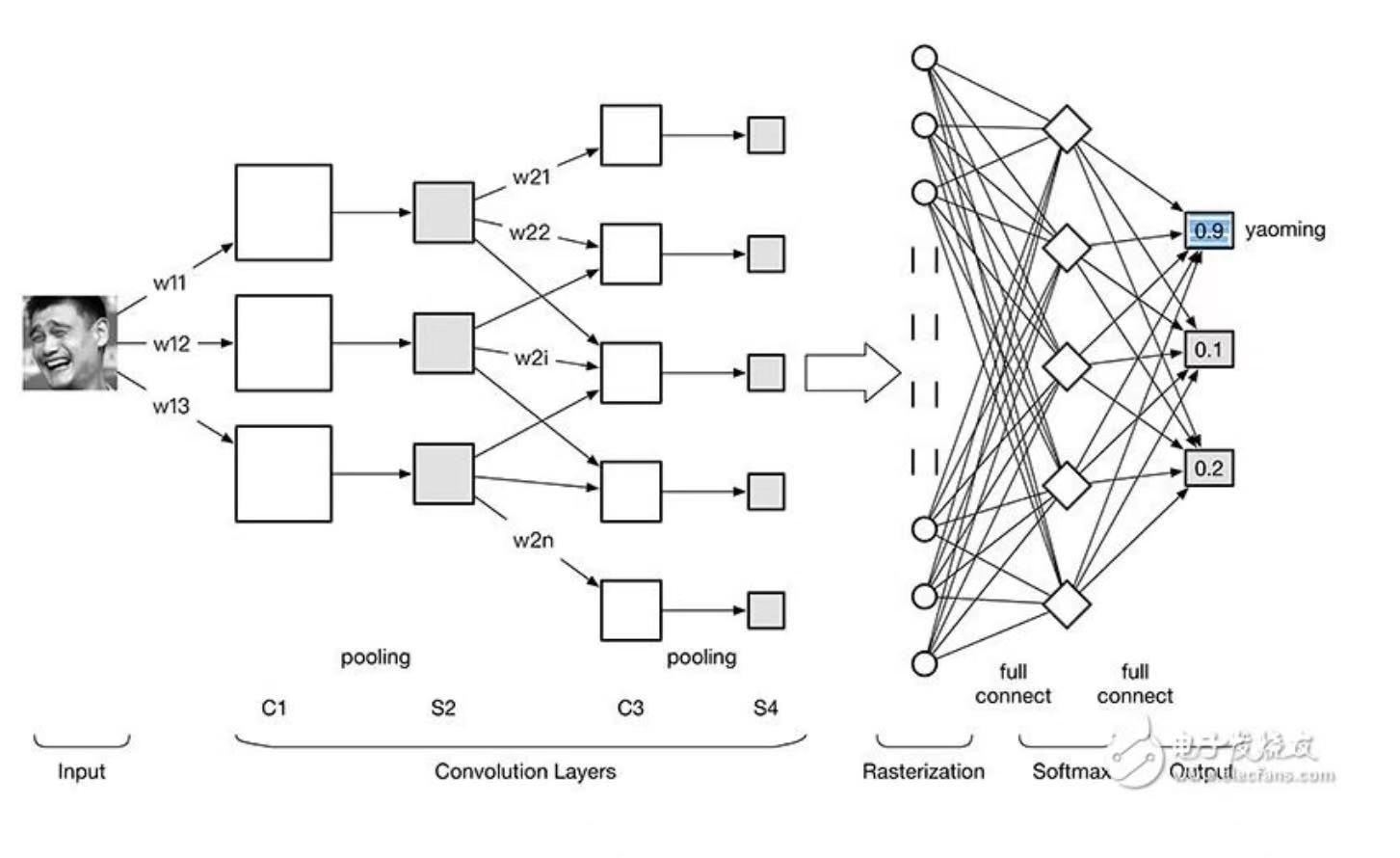

ChatGPT is an artificial intelligence built on a neural network (the Transformer architecture), and at its lowest level it imitates human neurons: here each parameter is treated as the counterpart of one neuron, although strictly a parameter is closer to the weight of a single connection. But the way a computer simulates a neuron is very simple and crude. A human neuron carries more than an on/off state: the phase of its signals also carries information. A simulated neuron reduces everything to a single number computed from its inputs and has no phase value at all. The amount of information a computer neuron transmits may be only one tenth to one hundredth of what a human neuron transmits.

Moreover, the connections between human neurons are very complicated: besides horizontal connections, there are also vertical ones. The brain has about 100 billion neurons, each with more than 1,000 outgoing connections, for a total of about 100 trillion synapses. A computer's simulated connections are extremely simple: each layer connects only to the layers directly above and below it.
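The counts in this paragraph can be checked with simple arithmetic. The Python sketch below uses only the article's own round figures. Comparing one parameter with one synapse (one connection weight), rather than with one neuron, is an assumption made for this comparison; the variable names are illustrative.

```python
# Round figures quoted in the article (order-of-magnitude values only).
NEURONS = 100_000_000_000             # ~100 billion neurons in the human brain
LINKS_PER_NEURON = 1_000              # ~1,000 outgoing connections per neuron
CHATGPT_PARAMETERS = 175_000_000_000  # 175 billion parameters

# Total connections in the brain: neurons times connections per neuron.
synapses = NEURONS * LINKS_PER_NEURON
print(f"synapses: {synapses:,}")  # 100,000,000,000,000 (100 trillion)

# Assumption: one parameter is roughly comparable to one synapse.
ratio = synapses / CHATGPT_PARAMETERS
print(f"synapses per parameter: {ratio:.0f}")  # 571
```

Even on this more generous comparison, the brain has several hundred times more connections than ChatGPT has parameters, before counting the richer signals each connection carries.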

Therefore, even if today's neural-network-based artificial intelligence keeps adding parameters, this quantitative change cannot turn into a qualitative change, and it will not become truly intelligent.

Figure: neural network of the human brain.

Figure: a computer convolutional neural network.

That is the micro view. From a macro point of view, however powerful ChatGPT is, it has only inductive ability, not reasoning ability. In other words, in principle it does not understand what we humans are training it for. It just memorizes patterns: it knows that a given problem is solved a certain way, without understanding what the problem is.

For example, artificial intelligence cannot truly understand addition or other mathematical rules. Suppose we taught ChatGPT to add, subtract, multiply and divide, then gave it four numbers, (1, 1, 6, 2), and asked it to make 24 from them using those four operations (the "24 points" game). A human, who can reason, works out 6*(2+1+1)=24. But if ChatGPT knows the four operations and has never been taught how to play 24 points, it cannot work out the answer.
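The search a human carries out when playing 24 points can be made explicit. The following is a minimal brute-force sketch in Python, not a method from the article; the function names `solve_24` and `_search` are hypothetical. It repeatedly picks two numbers, combines them with one of the four operations, and recurses until one number is left. It uses exact fractions so that division introduces no rounding errors.

```python
from fractions import Fraction
from itertools import permutations


def solve_24(numbers, target=24):
    """Return one expression string that evaluates to target, or None."""
    # Each item pairs an exact value with the expression that produced it.
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    # Try every ordered pair of distinct positions, so both a-b and b-a
    # (and both a/b and b/a) are covered.
    for i, j in permutations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        candidates = [
            (a + b, f"({ea}+{eb})"),
            (a - b, f"({ea}-{eb})"),
            (a * b, f"({ea}*{eb})"),
        ]
        if b != 0:  # skip division by zero
            candidates.append((a / b, f"({ea}/{eb})"))
        # Replace the chosen pair with their combination and recurse.
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None


if __name__ == "__main__":
    print(solve_24([1, 1, 6, 2]))  # prints one valid expression
```

The exact expression printed depends on the search order; any result is a valid way to reach 24 with the given numbers, and `None` means no combination exists.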

In other words, ChatGPT can summarize what it has been taught, but for anything it has not been taught, it cannot deduce something new from what it already knows.

ChatGPT has no reasoning ability, only inductive ability. Human beings have both inductive and reasoning ability, which is why they are creative and have real wisdom.

On the surface, artificial intelligence tools appear creative. For example, you can draw a picture with Stable Diffusion. But this is not really creation: Stable Diffusion recombines, in different patterns, what it learned from the countless images it was trained on.

As far as inductive ability is concerned, ChatGPT can be trained on the whole body of human knowledge and may become the most powerful inductive system in history, but its reasoning ability is zero.

Personally, I think that as long as human beings exist, they will tirelessly pursue eternal life. There are two paths to immortality. The first is the great development of artificial intelligence: in the future, artificial intelligence will gain real wisdom and replace human beings, or human consciousness will be uploaded to computers and become virtual life. Either way, silicon-based life replaces carbon-based life and becomes eternal.

The second path stays with carbon-based life: if the cells of our bodies could renew themselves indefinitely, we would naturally live forever. At present, the maximum human lifespan is about 120 years, while the average is just over 70. When body cells divide too many times, errors accumulate and some cells become cancerous. Cancer cells escape the control of the immune system and consume the resources of the whole body, until the person dies of systemic failure. In addition, many human cells cannot divide at all, or can divide only a limited number of times; nerve cells, for example, are hard to replace. In old age, brain cells die and are not replaced, which can lead to Alzheimer's disease; heart cells are not replaced either, and eventually people die of heart failure.

In nature, many organisms and cells can divide and reproduce indefinitely. For example, HeLa cells have been cultured for countless generations and still divide vigorously; in effect, they have achieved eternal life. Another example is the immortal jellyfish (Turritopsis dohrnii), which can revert from its adult stage to an earlier stage of life. As long as its marine environment does not change much and no accident befalls it, it can in principle live forever.

Why can't human beings, as carbon-based life, live forever? Because the human body is too complicated. Humankind has made great progress in information technology, but only slow progress in biotechnology.

In any case, as things stand, both paths to eternal life are very, very far off: whether artificial intelligence replaces human beings and silicon-based life replaces carbon-based life, or human beings renew themselves indefinitely and become eternal carbon-based life with wisdom.